Threads and Promises

The material in these notes goes deeper than we can cover in class. If you're having issues with concurrency, parallelism, or asynchronous execution: read these notes! If you're having trouble with the difference between promises and threads, read these notes!

Motivating Concurrency

Let's go back to the hours queue dispatcher. You can find a more complete version of the example in the project linked at the beginning of these notes.

If we run the main method in the Main class of this new example, we'll get something like this (using TA names from a prior semester):

08:45:09:837 Dispatcher: Welcome to edu.brown.cs32.livecode.threads.TA hours!

08:45:09:839 Dispatcher: Hi Nim; you'll be seen by Erica

08:45:09:844 Erica says: Hello Nim!

Erica says: Goodbye Nim, I hope that helped!!

08:45:10:859 Dispatcher: Hi Alice; you'll be seen by Erica

08:45:10:859 Erica says: Hello Alice!

Erica says: Goodbye Alice, I hope that helped!!

08:45:11:865 Dispatcher: Hi Bob; you'll be seen by Erica

08:45:11:865 Erica says: Hello Bob!

Erica says: Goodbye Bob, I hope that helped!!

08:45:12:870 Dispatcher: Hi Charli; you'll be seen by Erica

08:45:12:871 Erica says: Hello Charli!

Erica says: Goodbye Charli, I hope that helped!!

08:45:13:876 Dispatcher: Hi Boatswain; you'll be seen by Erica

08:45:13:877 Erica says: Hello Boatswain!

Erica says: Goodbye Boatswain, I hope that helped!!

08:45:14:882 Dispatcher: Hi Bucky; you'll be seen by Erica

08:45:14:882 Erica says: Hello Bucky!

Erica says: Goodbye Bucky, I hope that helped!!

08:45:15:887 Dispatcher (6 helped so far): Nobody waiting in queue, will check again in three seconds.

Until we look at the timestamps, this seems fine.

This doesn't look so great. We have some problems to solve. If you look at the code, you might see other problems too. Here are three:

- Challenge 1: the dispatcher is waiting for an individual TA to finish helping a student before allocating the next TA. Lots of TAs will be idle, and students will wait a lot longer.

- Challenge 2: maybe we'd like to add TAs while the dispatcher is running.

- Challenge 3: how can new students join the queue?

All of these problems are related to today's topic: concurrency:

- We'd like TAs to be able to help students without holding up the dispatcher from allocating other students to other TAs. We need the dispatcher to run concurrently with the TAs.

- We need a way for the

Mainclass to calldispatcher.addTA()after the dispatcher starts running. But right now, there's no way for theMainclass to run independently of the dispatcher. We need the dispatcher to run concurrently with themain()method. - We need a way for new students to be added to the queue. This is the same problem as above!

Concerns like these pop up all the time in engineering. (The problem isn't just that the way I've written the dispatcher library is not very realistic.)

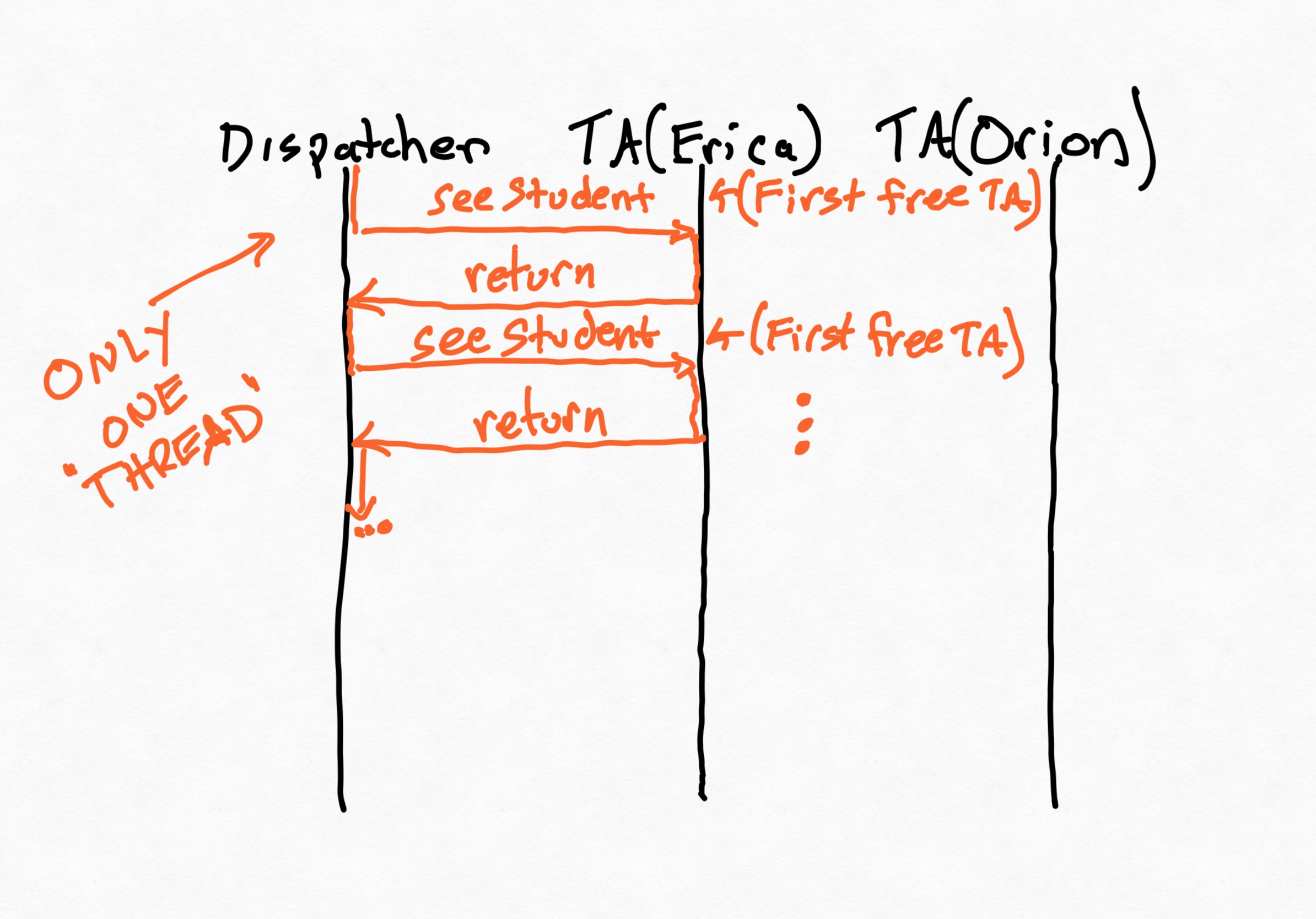

Here's a picture demonstrating the current state of affairs. These are called sequence diagrams, and they are very common in networking and distributed systems. Each vertical line corresponds to an entity (in this case, 3 different classes). Horizontal lines are messages or method calls. We can see the control flow, in a single thread, passing between the classes. Because Erica is always the first free TA in the list whenever the dispatcher gets to go, Erica is the only TA who gets to see students.

How does concurrency help us?

A program is concurrent if it involves multiple simultaneous threads of execution. Note that this doesn't necessarily mean that these multiple threads really are running at the same time, just that they are "executing". We will make a distinction in this class between concurrency and parallelism, where threads really are executing at the same time, usually on multi-core hardware or in the cloud. A parallel program is always concurrent, but the threads of a concurrent program may or may not actually be run in parallel.

To illustrate this idea, consider what your computer is doing right now. You're probably running more programs than you can count, even before you think about what your operating system is doing. How many CPU cores do you have? Probably not more than a dozen (and likely fewer). So not all of the concurrency that's happening can be parallelized: you'd need hundreds of cores for that! Instead, your operating system runs a scheduler program which allocates slices of time to different threads of execution.

Concurrency is more common than you might imagine. Because we're working with Java, every program you write is concurrent, even a "Hello World!" program. Why?

Think, then click!

The garbage collector!

Using concurrency to get what we want

Imagine logically splitting our big program into separate, independent "threads" of execution: one that runs the dispatcher, another that runs when a TA helps a student, and so on. We just need to separate them from each other, and help them communicate.

So far we've only had one thread: the one that starts up in our main method. How do we get another, and which should it correspond to?

Think, then click! (Image within...)

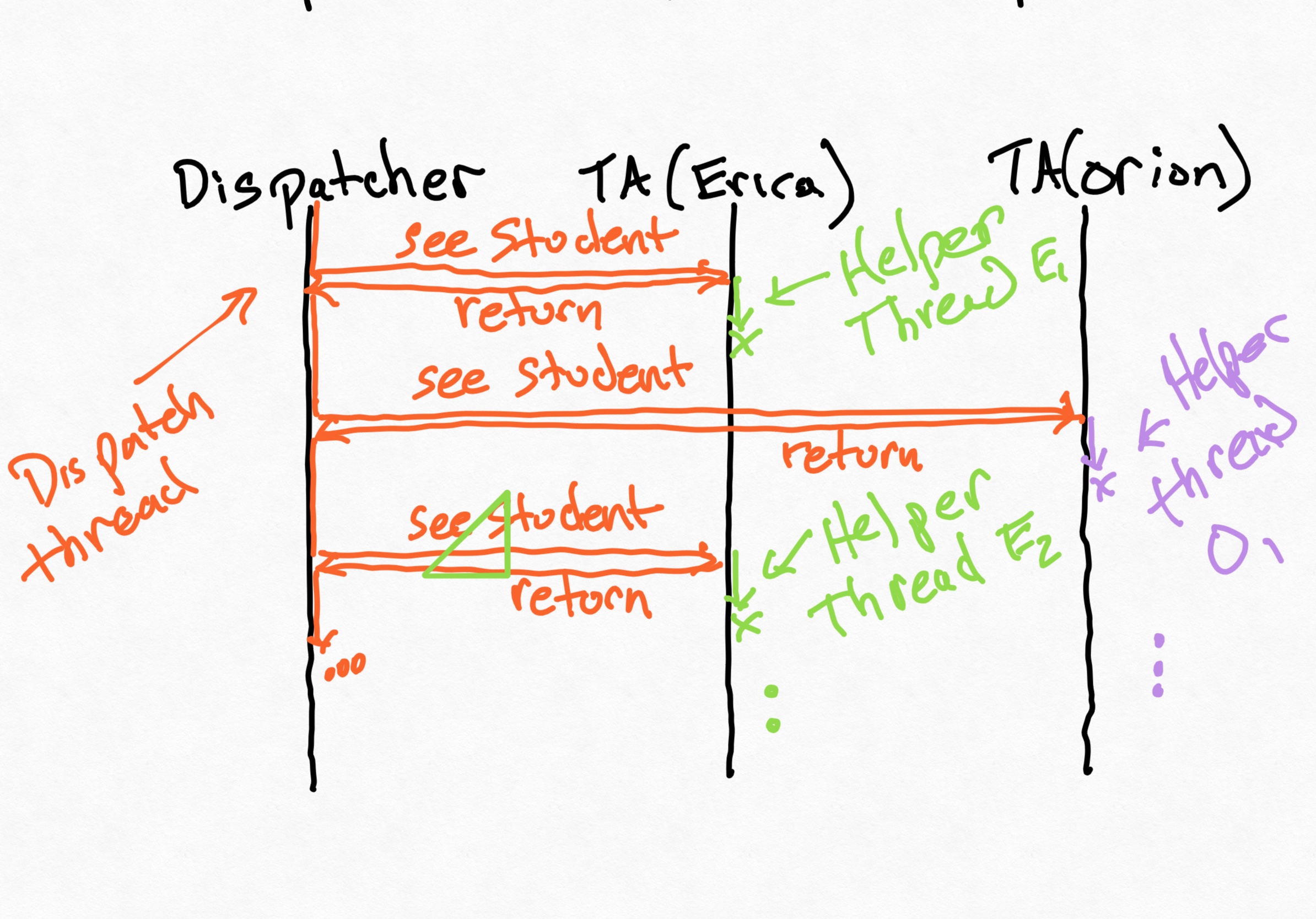

There are a few options. But let's start simply, and not try to solve all the challenges at once. We'll have every TA correspond to their own thread, and have those threads woken up by the dispatcher. it would look something like this:

The triangle is a drawing error I need to remove, but I ran out of time before class. :-)

Runnables and Threads

Java has an interface called Runnable, which requires the implementation of a run() method. It's also got a class called Thread, which has a constructor that accepts a Runnable and a method called start(). So, as a first cut, let's make TA implement Runnable, and whenever we dispatch a student to that TA, we run the thread.

public class TA implements Runnable {

// ...

public void seeStudent(Student student) throws TABusyException {

// ...

new Thread(this).start(); // NOT the same as .run()

}

// ...

@Override

public void run() {

// When the above .start() method is called, the *NEW THREAD* will execute this method.

}

}

Now, when we run, we'll see:

09:24:18:642 Dispatcher: Hi Charli; you'll be seen by Erica

09:24:18:647 Dispatcher: Hi Boatswain; you'll be seen by Orion

09:24:18:647 Erica says: Hello Charli!

09:24:18:647 Orion says: Hello Boatswain!

Orion says: Goodbye Boatswain, I hope that helped!!

09:24:19:652 Dispatcher: Hi Bucky; you'll be seen by Orion

09:24:19:652 Orion says: Hello Bucky!

Erica says: Goodbye Charli, I hope that helped!!

09:24:19:653 Dispatcher: Hi Nim; you'll be seen by Erica

09:24:19:653 Erica says: Hello Nim!

Orion says: Goodbye Bucky, I hope that helped!!

09:24:20:658 Dispatcher: Hi Alice; you'll be seen by Orion

Erica says: Goodbye Nim, I hope that helped!!

09:24:20:658 Dispatcher: Hi Bob; you'll be seen by Erica

09:24:20:658 Orion says: Hello Alice!

09:24:20:658 Erica says: Hello Bob!

09:24:20:658 Dispatcher (4 helped so far): Nobody waiting in queue, will check again in three seconds.

Orion says: Goodbye Alice, I hope that helped!!

Erica says: Goodbye Bob, I hope that helped!!

09:24:23:662 Dispatcher (6 helped so far): Nobody waiting in queue, will check again in three seconds.

Much better!

Concurrency vs. Parallelism (Again)

The TA thread and the main thread really are running separately. It's not clear whether they are truly running in parallel, though: the operating system and Java runtime decide that, in part based on how many cores the hardware has available.

What Could Go Wrong?

Concurrency seems really powerful. But are there any risks associated with it? Let's investigate.

Suppose we want to record how many students have been seen before the dispatcher terminates. One natural way to do this is by adding a static counter to the dispatcher class:

static int studentsSeen = 0;

Now, every TA can increment this counter in its run method, when the student has been helped:

HoursDispatcher.studentsSeen += 1;

If we tell the dispatcher to print this counter out, we'll see output like this at the end of the queue:

11:56:10 Dispatcher: Nobody waiting in queue, will check again in three seconds. So far we helped 6 students.

And indeed that's what we see. But let's see how this works at scale. Instead of using these names, we'll create a hundred TAs, and a few thousand students who need to be helped!

So that we can simulate helping so many students without waiting, let's reduce the delay time to help a student: students will be helped instantaneously! We'll also remove the printing in the TA class, since that slows things down.

We might see something like this:

12:10:52 Dispatcher: Nobody waiting in queue, will check again in three seconds. So far we helped 299993 students.

Uh oh.

What's going on? (By the way, this issue might be less likely to happen if we left the printing in.)

Think, then click!

I've made the classic thread safety mistake. Incrementing that counter isn't atomic: two threads might be trying to edit it at once. Suppose they both fetch the current value at once, add 1 to that value, and then write. If that sequence of operations happens, the counter will only be increased by one.

This sort of issue is pervasive in multi-threaded programming. Do the reading---you'll save yourselves a lot of pain.

How Can We Fix This?

The first approach is old-school synchronization:

synchronized (HoursDispatcher.class) {

HoursDispatcher.studentsSeen += 1;

}

This will tell Java that only one TA can be running that increment operation at a time. (The argument to synchronized helps disambiguate between multiple dimensions of synchronization we might have happening).

Another approach is to use thread safe objects from Java's standard library. In particular, I could have used an AtomicInteger for the counter:

static AtomicInteger studentsSeen = new AtomicInteger(0);

// ...

HoursDispatcher.studentsSeen.incrementAndGet();

Both of these approaches fix the problem. The key takeaways are:

- Having multiple threads of execution lets a program better separate responsibilities into different execution paths that run at the "same time", logically speaking.

- These threads might actually be run at the same time, but, depending on the operating system, they might be run by time-slicing (i.e., taking turns on one core).

- Concurrency can cause unusual bugs that don't always happen, or that happen differently across executions. Situations where the ordering of operations results in inconsistent behavior are called race conditions.

Asynchronous Execution

Let's get back to TypeScript. TypeScript has really convenient support for concurrency. Try these in the browser console:

console.log('1')

setTimeout(() => console.log('2'), 5000)

console.log('3')

But just under that surface, complexity lurks:

console.log('1')

setTimeout(() => console.log('2'), 5000)

console.log('3')

while(true) {}

What's happening here? JavaScript—a language built for the web—is a language whose design is deeply and unavoidably tangled with concurrency.

And yet, JavaScript itself (barring some modern extensions, which are best used for expensive tasks that would block important things like UI interactivity) is only single-threaded. We don't create a new thread to wait for a web request to finish. Instead, we create a callback, like we would for a button being clicked.

In principle, callbacks are called as soon as possible. But the definition of "as soon as possible" is complicated. The browser is in charge (or Node is, if you're running a backend server).

The Callback Queue

Because TypeScript is single threaded, it can't actually invoke a callback while it's running some other piece of code. It has to wait until that code finishes, and then it looks at its callback queue to see if it has callbacks waiting to be processed.

Every time a callback is registered (in the setTimeout example above, the 0-argument function that invokes console.log is a callback) it is added to the queue. Crucially, these calls will only ever take place if they're popped off the queue. And they're only ever removed when the code currently being executed is finished.

This will become extremely important when you start sending web requests from your frontend to your API server. Callbacks are not threads. Asynchronous execution is very closely related to concurrency, however.

Callbacks are not threads. Neither are promises, async functions, or anything else in the remainder of these notes.

Code Review Exercise

Let's look at some code and anticipate potential errors related to concurrency. (I've removed the types so that we can run this in the browser console.) What's the value that you expect to be printed by each block of code below, after its respective call is uncommented?

function example0() {

let toReturn = 0

setTimeout(() => {toReturn = 100}, 5000)

return toReturn

}

//console.log(example0())

function example1(){

let toReturn = 0

setTimeout(() => {toReturn = 500}, 0)

setTimeout(() => {toReturn = 100}, 5000)

return toReturn

}

// console.log(example1())

function example2(){

setTimeout(() => {console.log('A')}, 0)

setTimeout(() => {console.log('B')}, 5000)

console.log('C')

while(0 == 0) {}

console.log('D')

}

// example2()

Connecting to Sprint 2

Right now, you're working with file I/O. Because reading from or writing to a file can take time, file I/O is asynchronous in TypeScript. The async and await operators help us deal with asynchronous execution.

In class, we'll look at this informally in my own CSV parser code.

Next time, we'll introduce asynchronous execution in the context of making web requests.